System Components and Architecture

Applies to: Alation Cloud Service instances of Alation

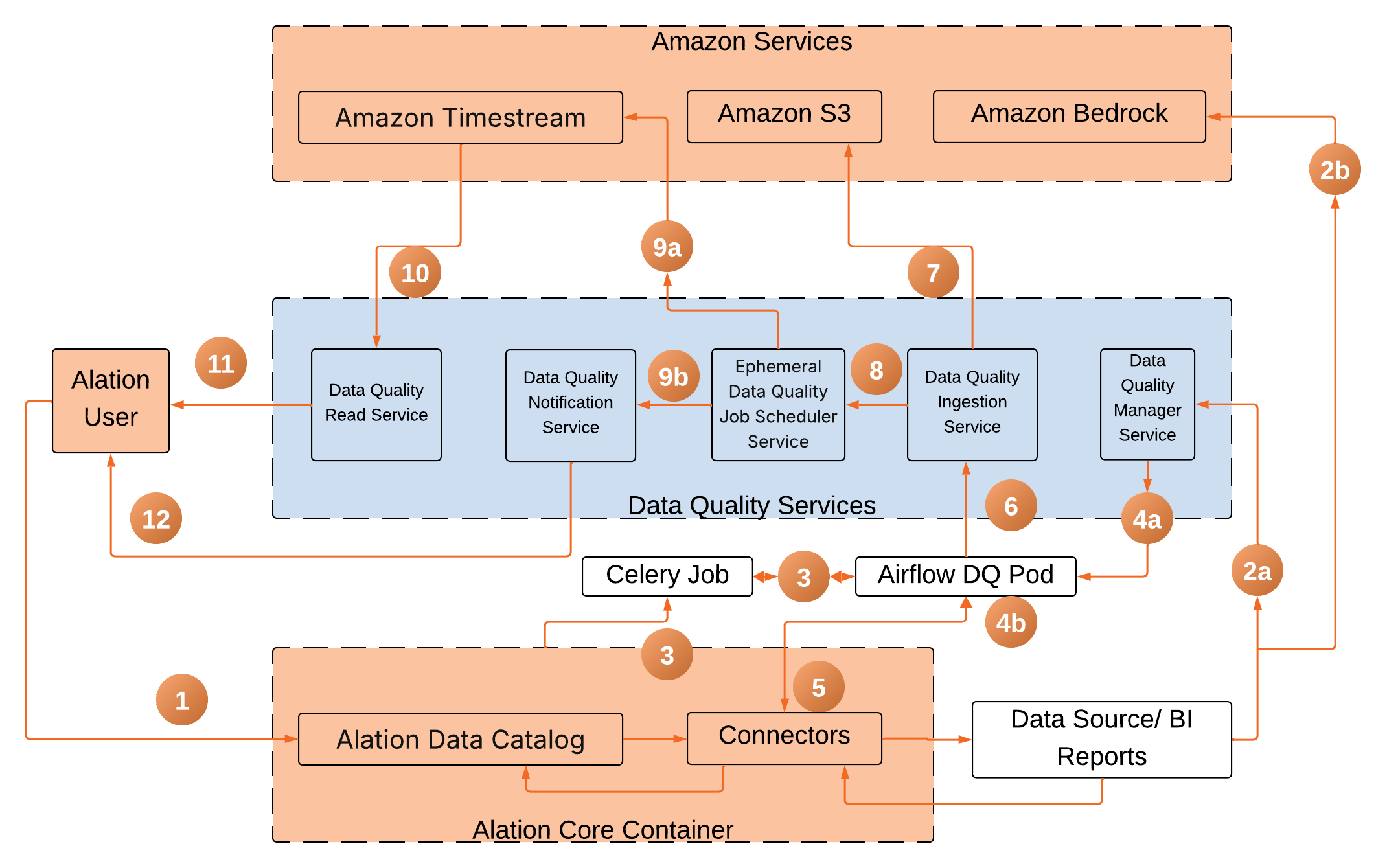

The Alation Data Quality architecture combines several components. The Alation Core Container handles cataloging and user interactions, the Data Quality Manager Service stores monitor configurations, and the Airflow DQ Pod executes data quality checks. Amazon Bedrock provides AI-powered check recommendations, the Data Quality Ingestion Service processes and stores check results, Amazon Timestream holds historical metrics, the Data Quality Read Service serves those results to the user interface, and the Data Quality Notification Service manages alerts.

System Components

The architecture consists of the following key components:

Alation Core Container: Houses the Data Catalog and coordinates user interactions.

Data Quality Manager Service: Stores and manages monitor configurations.

Airflow DQ Pod: Executes data quality checks using pushdown SQL queries.

Amazon Bedrock: Provides AI-powered check recommendations via Claude 3 Sonnet.

Data Quality Ingestion Service: Processes and stores check results.

Amazon Timestream: Time-series database for historical quality metrics.

Data Quality Read Service: Fetches results from Amazon Timestream and displays them in the user interface.

Data Quality Notification Service: Manages alerts and notifications.

Architecture

Monitor Creation

To create a monitor, the user works in the Data Quality interface against the Alation Data Catalog in the Alation Core Container, which holds the metadata of tables and BI reports. The Data Catalog obtains this metadata from the data source connectors through metadata extraction (MDE), lineage for BI reports, and query log ingestion (QLI).

The Alation Core Container then interacts with the Data Quality Manager Service:

For manual checks, the Alation Core Container sends the monitor information to the Data Quality Manager database, where it is stored.

For AI-driven checks (the Recommend Checks option), the Alation Core Container sends the column name and column type to Amazon Bedrock, where a large language model (LLM) returns the recommended checks. It then stores the monitor information in the Data Quality Manager database.
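The two creation paths above can be sketched as a single function that branches on whether the checks come from the user or from a recommender. This is a minimal illustration, not Alation's implementation: `Column`, `Monitor`, `create_monitor`, `fake_recommender`, and the check dictionaries are all hypothetical names, the dictionary `store` stands in for the Data Quality Manager database, and the recommender is a canned stub rather than a real Amazon Bedrock call.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Column:
    name: str
    data_type: str


@dataclass
class Monitor:
    table: str
    checks: list = field(default_factory=list)


def create_monitor(
    table: str,
    columns: list,
    store: dict,
    manual_checks: Optional[list] = None,
    recommend: Optional[Callable[[str, str], list]] = None,
) -> Monitor:
    """Build a monitor from manual or recommended checks, then store it."""
    if manual_checks is not None:
        # Manual path: user-defined checks are stored as given.
        checks = list(manual_checks)
    elif recommend is not None:
        # AI-driven path: only the column name and type reach the recommender.
        checks = []
        for col in columns:
            checks.extend(recommend(col.name, col.data_type))
    else:
        raise ValueError("provide manual_checks or a recommend callable")
    monitor = Monitor(table=table, checks=checks)
    store[table] = monitor  # stand-in for the Data Quality Manager database
    return monitor


def fake_recommender(name: str, data_type: str) -> list:
    """Hypothetical stub for the LLM call; returns canned suggestions."""
    checks = [{"column": name, "check": "not_null"}]
    if data_type.lower() in {"int", "integer", "bigint"}:
        checks.append({"column": name, "check": "non_negative"})
    return checks
```

Passing the recommender as a callable keeps the sketch testable without network access; in either path, the stored monitor has the same shape.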

Monitor Run and Schedule

When the user runs the monitor, the Alation Core Container creates a Celery job and calls the Airflow DQ Pod.

The Airflow DQ Pod fetches the monitor definition from the Data Quality Manager Service and the credentials from the data source connectors in the Alation Core Container.

The Airflow DQ Pod then builds a pushdown SQL query from the check rules, whether defined by the user or recommended by Amazon Bedrock, and passes it to the Query Service of the data source connectors for execution.
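To illustrate what a pushdown query looks like, the sketch below turns a check rule into a single aggregate SQL statement, so the source database does the counting and only two numbers come back. The rule names (`not_null`, `non_negative`), the function names, and the output columns are assumptions made for this example; the actual rule format used by the Airflow DQ Pod is not described here.

```python
import re

_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")

# Hypothetical rule names mapped to a predicate that matches FAILING rows.
_FAILURE_PREDICATES = {
    "not_null": "{col} IS NULL",
    "non_negative": "{col} < 0",
}


def safe_name(name: str) -> str:
    """Allow only plain or dot-qualified identifiers, to avoid SQL injection."""
    for part in name.split("."):
        if not _IDENTIFIER.fullmatch(part):
            raise ValueError(f"unsafe identifier: {name!r}")
    return name


def build_pushdown_sql(table: str, column: str, check: str) -> str:
    """Return one aggregate query that counts total and failing rows."""
    if check not in _FAILURE_PREDICATES:
        raise ValueError(f"unknown check: {check!r}")
    predicate = _FAILURE_PREDICATES[check].format(col=safe_name(column))
    return (
        "SELECT COUNT(*) AS total_rows, "
        f"SUM(CASE WHEN {predicate} THEN 1 ELSE 0 END) AS failed_rows "
        f"FROM {safe_name(table)}"
    )
```

Because the aggregation runs inside the data source, no row-level data leaves it; the caller receives only `total_rows` and `failed_rows`.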

The Airflow DQ Pod then fetches the results of the monitor run and sends them to the Data Quality Ingestion Service.

The Data Quality Ingestion Service stores the results in Amazon S3.

The Data Quality Ingestion Service calls the Ephemeral Data Quality job scheduler service.

The Ephemeral Data Quality job scheduler service then:

Creates an additional pod on demand to store these results, based on their timestamp, in the Amazon Timestream database.

Sends the data to the Data Quality Notification Service.
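The result-handling steps above can be sketched as one function over in-memory stand-ins. Everything here is an assumption for illustration: `handle_run_result`, the result fields, and the key format are hypothetical, a dictionary stands in for Amazon S3, a list stands in for Amazon Timestream, and `notify` stands in for the Data Quality Notification Service.

```python
from datetime import datetime, timezone
from typing import Callable


def handle_run_result(
    result: dict,
    object_store: dict,
    timeseries: list,
    notify: Callable[[dict], None],
) -> str:
    """Persist a raw run result, record it by timestamp, and forward it."""
    run_at = result["run_at"]

    # Step 1: keep the raw result (stand-in for Amazon S3).
    key = f"{result['monitor_id']}/{run_at.isoformat()}.json"
    object_store[key] = result

    # Step 2: append a time-series record and keep records ordered by time
    # (stand-in for the on-demand pod writing to Amazon Timestream).
    record = {
        "time": run_at,
        "monitor_id": result["monitor_id"],
        "check": result["check"],
        "passed": result["passed"],
    }
    timeseries.append(record)
    timeseries.sort(key=lambda r: r["time"])

    # Step 3: hand the record to the notification stand-in, which decides
    # whether an alert is warranted.
    notify(record)
    return key


if __name__ == "__main__":
    sent = []
    raw, series = {}, []
    handle_run_result(
        {
            "monitor_id": "m1",
            "check": "not_null",
            "passed": False,
            "run_at": datetime(2024, 1, 1, tzinfo=timezone.utc),
        },
        raw,
        series,
        sent.append,
    )
    print(len(raw), len(series), len(sent))
```

Keeping the raw result separate from the time-series record mirrors the split described above between result storage and historical metrics.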

Data Quality Score Presentation

The Data Quality Read Service fetches the results from Amazon Timestream.

The Data Quality Read Service then displays the results to the user in the user interface.

The Data Quality Notification Service sends notifications and alerts to the user.