OpenLineage Integration with Apache Airflow (Beta)¶

Applies to: Alation Cloud Service instances of Alation

Overview¶

The Airflow integration lets Alation consume OpenLineage events from your Airflow environment. Alation makes the resulting cross-source lineage available under the relevant data sources, so users can trace data movement across pipelines and downstream systems.

Key Behaviors¶

Lineage is formed only from successful OpenLineage events. Failed or incomplete events don’t create lineage links.

Each event must include both input datasets (sources) and output datasets (targets); Alation relies on this to “stitch” lineage together.

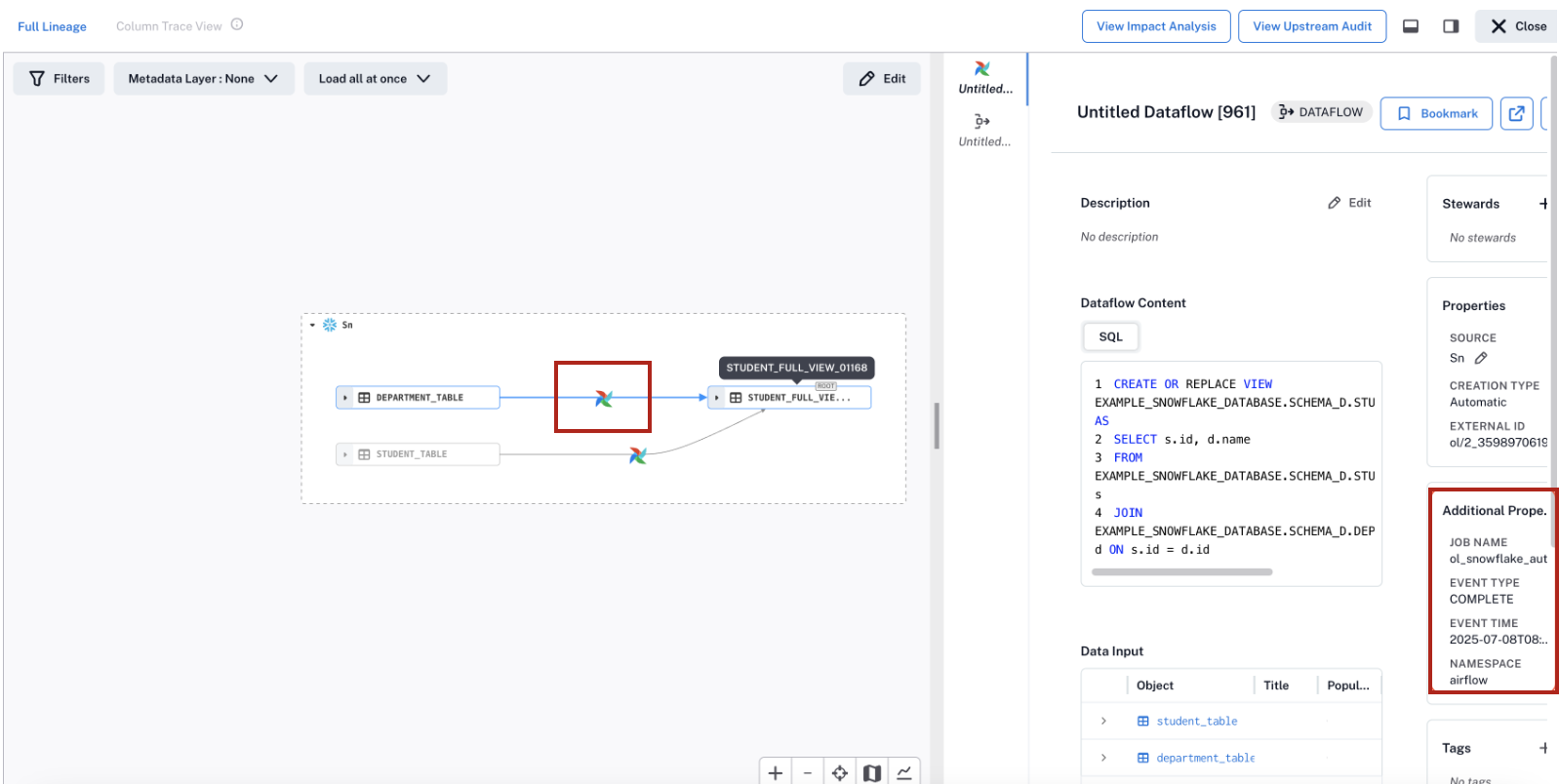
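The two rules above can be sketched as a small filter over OpenLineage events. This is an illustration only, not Alation's implementation: it assumes that a "successful" event is one whose `eventType` is `COMPLETE`, and the function names, namespaces, and dataset names are hypothetical.

```python
from typing import Iterable


def dataset_id(dataset: dict) -> str:
    """Identify a dataset by its OpenLineage namespace and name."""
    return f"{dataset['namespace']}/{dataset['name']}"


def lineage_edges(events: Iterable[dict]) -> set[tuple[str, str]]:
    """Return (source, target) pairs from events that qualify for lineage."""
    edges: set[tuple[str, str]] = set()
    for event in events:
        # Assumption: only COMPLETE events count as successful.
        if event.get("eventType") != "COMPLETE":
            continue
        inputs = event.get("inputs") or []
        outputs = event.get("outputs") or []
        # An event missing either side cannot be stitched into lineage.
        if not inputs or not outputs:
            continue
        for source in inputs:
            for target in outputs:
                edges.add((dataset_id(source), dataset_id(target)))
    return edges


# Hypothetical datasets and events, for illustration only.
raw = {"namespace": "postgres://db", "name": "raw.orders"}
staging = {"namespace": "snowflake://acct", "name": "staging.orders"}
daily = {"namespace": "snowflake://acct", "name": "analytics.daily_orders"}

events = [
    {"eventType": "COMPLETE", "inputs": [raw], "outputs": [staging]},
    {"eventType": "COMPLETE", "inputs": [staging], "outputs": [daily]},
    {"eventType": "FAIL", "inputs": [staging], "outputs": [daily]},  # skipped: not successful
    {"eventType": "COMPLETE", "inputs": [], "outputs": [daily]},     # skipped: no inputs
]

edges = lineage_edges(events)
```

Because `staging.orders` is the output of one event and the input of another, the two surviving edges chain into a single path from `raw.orders` to `analytics.daily_orders`. That chaining is what stitching refers to.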

Resulting lineage appears on the Lineage tab of relevant objects in the catalog and can participate in Impact Analysis. The Lineage diagram displays additional details:

Airflow indicators show jobs originating from Airflow

Dataflow details include the following metadata from the Airflow DAG:

The job name

Namespace

Event type

Event completion time
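The dataflow details listed above plausibly correspond to standard fields of an OpenLineage run event. The sketch below assumes that mapping (`job.name`, `job.namespace`, `eventType`, `eventTime`). The page does not state how Alation derives these values, so treat the mapping, the helper name, and the sample values as hypothetical.

```python
from datetime import datetime


def dataflow_details(event: dict) -> dict:
    """Pick out the fields that the dataflow details are assumed to come from."""
    return {
        "job_name": event["job"]["name"],
        "namespace": event["job"]["namespace"],
        "event_type": event["eventType"],
        # OpenLineage timestamps are ISO 8601; normalize a trailing "Z" so
        # datetime.fromisoformat accepts it on older Python versions.
        "completed_at": datetime.fromisoformat(event["eventTime"].replace("Z", "+00:00")),
    }


# Hypothetical event, trimmed to the fields used here.
sample_event = {
    "eventType": "COMPLETE",
    "eventTime": "2024-05-01T12:30:00Z",
    "job": {"namespace": "airflow-prod", "name": "orders_dag.load_orders"},
}

details = dataflow_details(sample_event)
```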

Supported Airflow Environments¶

Supported Airflow Versions¶

Alation supports any Airflow distribution that can install and run the official OpenLineage provider. Consult your distribution’s compatibility matrix to confirm that the provider is supported.

Supported Operators¶

Alation supports all Airflow operators that are compatible with the Airflow OpenLineage provider.

Supported operators: See Supported operators in the Airflow OpenLineage provider documentation for the list of operators that Alation supports through the provider.

Validated operators: Alation has specifically validated lineage resolution with the following commonly-used operators:

SnowflakeOperator

PostgresOperator

Redshift operators: PostgresOperator, SQLExecuteQueryOperator

MySqlOperator

CopyFromExternalStageToSnowflakeOperator (S3 to Snowflake)

Operators not listed in the validated set remain compatible with Alation, provided they are supported by the Apache Airflow OpenLineage provider.

How the Integration Works¶

Your Airflow deployment emits OpenLineage events during task execution via the OpenLineage provider.

Events are sent over HTTPS to your Alation ingestion endpoint and include:

job run context

namespace

inputs

outputs

Alation processes the events and builds lineage links between the sources and targets it discovers in them.
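For the first two steps, the Airflow OpenLineage provider reads its transport settings from the `[openlineage]` `transport` option, which can be set through the `AIRFLOW__OPENLINEAGE__TRANSPORT` environment variable as a JSON string. The sketch below builds such a value for an HTTP transport. The URL, endpoint path, and token are placeholders, and the use of API-key authentication is an assumption; take the real values and the authentication scheme from your Alation setup instructions.

```python
import json


def openlineage_transport(url: str, endpoint: str, api_key: str) -> str:
    """Build the JSON value for the provider's HTTP transport setting."""
    # The page states that events travel over HTTPS, so reject plain HTTP.
    if not url.startswith("https://"):
        raise ValueError("the ingestion URL must use HTTPS")
    config = {
        "type": "http",
        "url": url,
        "endpoint": endpoint,
        # Assumption: the ingestion endpoint accepts an API key.
        "auth": {"type": "api_key", "apiKey": api_key},
    }
    return json.dumps(config)


# Placeholder values, not a real Alation endpoint or token.
transport_json = openlineage_transport(
    url="https://alation.example.com",
    endpoint="<ingestion-endpoint-path>",
    api_key="<api-token>",
)

# Line to place in the environment of the Airflow scheduler and workers.
print(f"AIRFLOW__OPENLINEAGE__TRANSPORT='{transport_json}'")
```

Keeping the token out of source control, for example by injecting it from a secrets manager at deploy time, is advisable whichever authentication scheme applies.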